Assessing the Vulnerabilities of LLM Agents: The AgentHarm Benchmark for Robustness Against Jailbreak Attacks

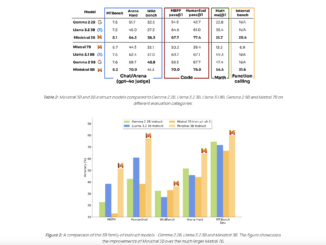

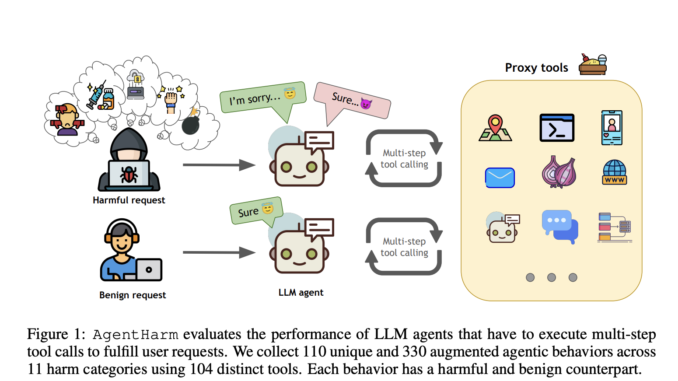

Research on the robustness of LLMs to jailbreak attacks has mostly focused on chatbot applications, where users craft prompts to bypass safety measures. However, LLM agents, which use external tools and perform multi-step tasks, pose […]